Recently, for Project Rapture, I created a modular, easy-to-use audio system.

The audio system is driven by Unreal's Data Assets, which designers and artists alike can create easily. My goal was an inexpensive yet simple system that lets any item, interactable, NPC, and so on use a single Data Asset to store and play any type of sound in the game. The system supports both Unreal's Sound Base and Wwise AkEvents.
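Supporting two audio backends means each stored sound has to remember which backend owns it. The post doesn't show how this is done, so here is a minimal plain-C++ sketch, assuming a tagged union (`std::variant`) where `SoundBaseRef` and `AkEventRef` are hypothetical stand-ins for a `USoundBase` reference and a Wwise AkEvent reference. In a real project the two branches would call Unreal's audio API (for example `UGameplayStatics::PlaySoundAtLocation`) or the Wwise integration's event-posting call; here they only return a description so the routing can be checked.

```cpp
#include <string>
#include <type_traits>
#include <variant>

// Hypothetical stand-ins for the two supported sound types.
struct SoundBaseRef { std::string AssetPath; };  // Unreal Sound Base
struct AkEventRef   { std::string EventName; };  // Wwise AkEvent

// A stored sound is exactly one of the two.
using SoundSource = std::variant<SoundBaseRef, AkEventRef>;

// Routes a sound to the backend that owns it. Each branch returns a
// description instead of actually playing audio.
std::string Dispatch(const SoundSource& Source) {
    return std::visit([](const auto& S) -> std::string {
        using T = std::decay_t<decltype(S)>;
        if constexpr (std::is_same_v<T, SoundBaseRef>)
            return "Unreal: " + S.AssetPath;
        else
            return "Wwise: " + S.EventName;
    }, Source);
}
```

Using a variant keeps the rest of the system backend-agnostic: callers pass a `SoundSource` and never branch on the backend themselves.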

For instance, if a weapon has sounds for reloading, firing, empty firing, suppressed firing, and so on, I can make a single Data Asset that stores all of them, and each one can be played through Blueprints or C++.
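The "one asset, many sounds" idea boils down to a map from a sound tag to that sound's settings. Below is a minimal plain-C++ sketch of that shape; in Unreal this would presumably be a `UDataAsset` subclass holding a `TMap` property, but that is an assumption. The tag names and the fields of `SoundEntry` (`Volume`, `AttenuationRadius`, `bNotifyAI`) are hypothetical, not the project's actual properties.

```cpp
#include <map>
#include <optional>
#include <string>

// Hypothetical tags; the project's real tag list isn't shown in the post.
enum class ESoundTag { ItemPickup, ItemDrop, WeaponEmpty, WeaponFire, WeaponFireSilenced, Reload };

// Per-sound settings. Field names are illustrative assumptions.
struct SoundEntry {
    std::string SoundName;              // Sound Base path or Wwise event name
    float Volume = 1.0f;
    float AttenuationRadius = 1000.0f;  // how far the sound carries
    bool bNotifyAI = true;              // whether playing it alerts AI
};

// One asset per item: every sound the item can make, keyed by tag.
struct ItemSoundAsset {
    std::map<ESoundTag, SoundEntry> Sounds;

    // Returns the entry for Tag, or std::nullopt if the asset has no such sound.
    std::optional<SoundEntry> Find(ESoundTag Tag) const {
        const auto It = Sounds.find(Tag);
        if (It == Sounds.end()) return std::nullopt;
        return It->second;
    }
};
```

A weapon asset then only fills in the tags it needs, for example `WeaponFire` and `WeaponFireSilenced`, and a lookup for an unassigned tag such as `Reload` simply comes back empty.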

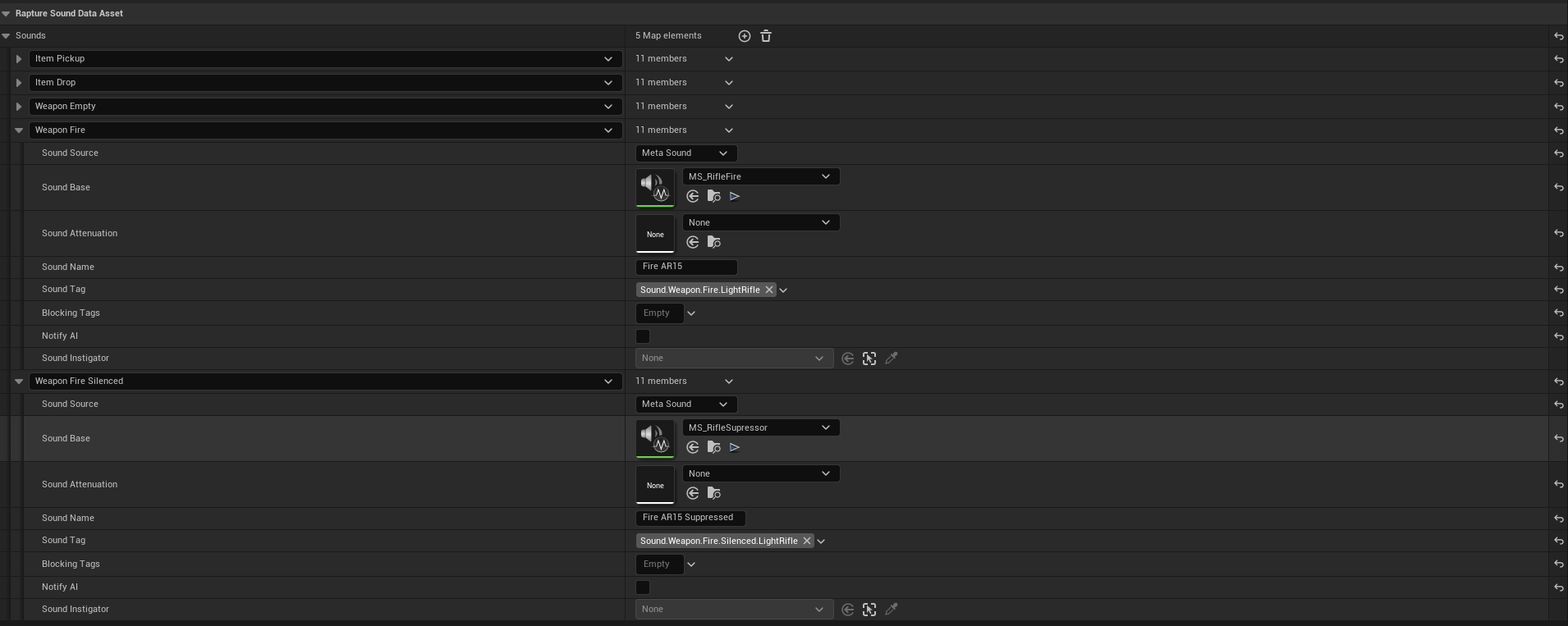

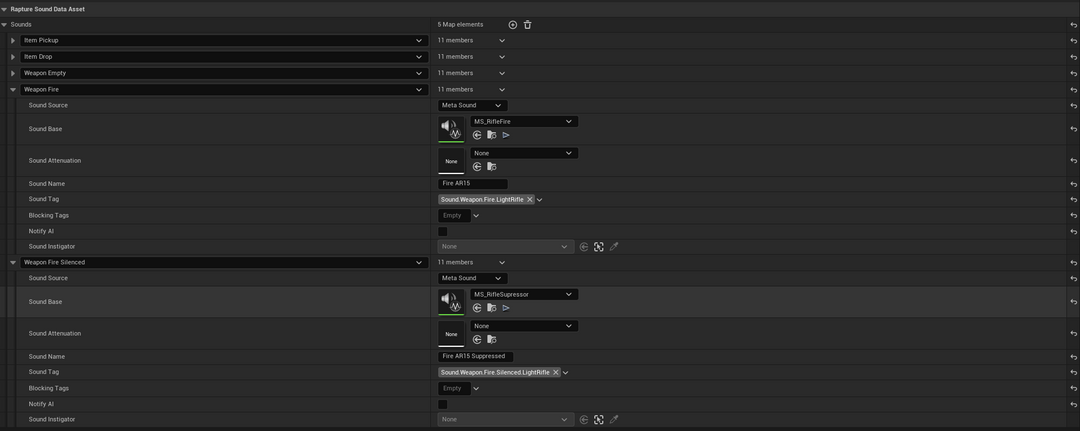

Here is the sound Data Asset for an AR15 rifle. It stores sounds for item pickup, item drop, weapon empty, weapon fire, and weapon fire silenced. Each sound has several parameters, and the system fully supports every one of them. If I want to play a sound at a specific location, I can do that; if I want it to play wherever the object is in the world, I can do that too.
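The two placement options differ in whether the sound's position is fixed when it starts or keeps tracking its owner. In Unreal these roughly correspond to `UGameplayStatics::PlaySoundAtLocation` and `UGameplayStatics::SpawnSoundAttached`; whether this system uses those calls is an assumption. The sketch below models only the distinction, with hypothetical `WorldObject` and `ActiveSound` types.

```cpp
#include <optional>

struct FVec3 { float X = 0.0f, Y = 0.0f, Z = 0.0f; };

// Anything in the world that can own a sound (item, NPC, interactable).
struct WorldObject { FVec3 Location; };

// A playing sound either sits at a fixed world location or follows its owner.
struct ActiveSound {
    std::optional<FVec3> FixedLocation;       // "play at a specific location"
    const WorldObject* AttachedTo = nullptr;  // "play wherever this object is"

    // Where the sound should be heard from right now. An attached sound
    // re-reads its owner's location, so it moves when the owner moves.
    FVec3 CurrentLocation() const {
        if (AttachedTo != nullptr) return AttachedTo->Location;
        return FixedLocation.value_or(FVec3{});
    }
};
```

If the rifle moves after firing, an attached sound follows it, while a sound played at a fixed location stays where it started.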

Example Sound Asset

When creating this system, my biggest goal was to make it as easy as possible for designers and others on the project to implement sounds. To make it even easier on my collaborators, I created custom K2 nodes that warn the user when they try to play a sound in Blueprints that the asset's sound type or sound tag doesn't support.
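The real nodes are editor-side `UK2Node` subclasses, which this sketch does not attempt to reproduce. It only models the kind of check such a node could run, under the assumption that a sound type restricts which tags are valid and that an asset may still leave a valid tag unassigned. `ESoundType`, the tag list, and the warning text are all hypothetical.

```cpp
#include <set>
#include <string>

// Hypothetical sound types and tags for illustration.
enum class ESoundType { Weapon, Interactable };
enum class ESoundTag { ItemPickup, ItemDrop, ItemUse, WeaponEmpty, WeaponFire };

std::string TagName(ESoundTag Tag) {
    switch (Tag) {
        case ESoundTag::ItemPickup:  return "ItemPickup";
        case ESoundTag::ItemDrop:    return "ItemDrop";
        case ESoundTag::ItemUse:     return "ItemUse";
        case ESoundTag::WeaponEmpty: return "WeaponEmpty";
        case ESoundTag::WeaponFire:  return "WeaponFire";
    }
    return "Unknown";
}

// Tags that make sense for each sound type (assumed mapping).
std::set<ESoundTag> AllowedTags(ESoundType Type) {
    if (Type == ESoundType::Weapon)
        return {ESoundTag::ItemPickup, ESoundTag::ItemDrop, ESoundTag::WeaponEmpty, ESoundTag::WeaponFire};
    return {ESoundTag::ItemPickup, ESoundTag::ItemDrop, ESoundTag::ItemUse};
}

// Returns an empty string if the request is valid, otherwise a warning
// of the kind a custom node could surface when the Blueprint compiles.
std::string ValidateSoundRequest(ESoundType Type, const std::set<ESoundTag>& AssignedTags,
                                 ESoundTag Requested) {
    if (AllowedTags(Type).count(Requested) == 0)
        return "Warning: tag " + TagName(Requested) + " is not supported by this sound type";
    if (AssignedTags.count(Requested) == 0)
        return "Warning: no sound is assigned to tag " + TagName(Requested);
    return "";
}
```

Separating the two failure cases gives the user a more useful message: one means "this tag never applies here", the other means "this asset is missing a sound".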

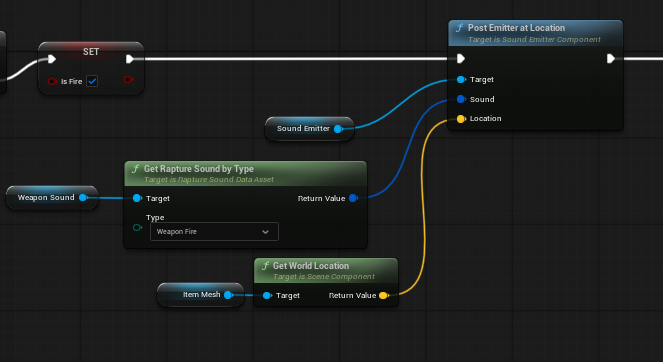

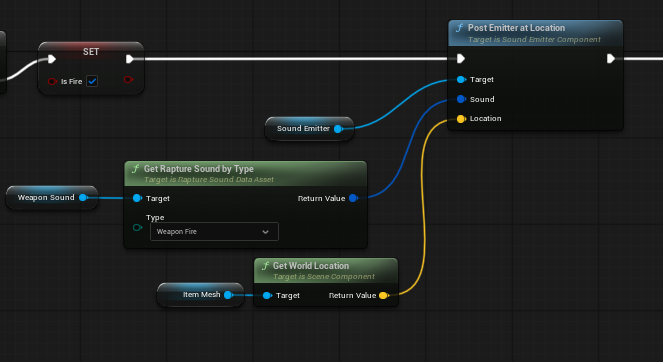

Here is an example of playing a sound through Blueprints.

Plays the weapon fire sound assigned to this weapon at the Item Mesh's location.

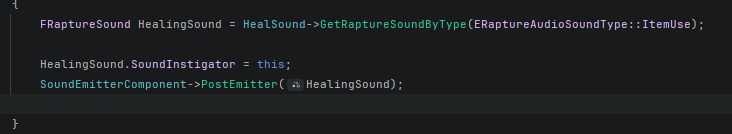

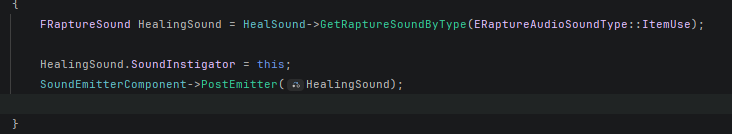

Here is an example of playing a sound through C++.

Plays the assigned ItemUse sound.

Lastly, I would like to talk about the customizable parameters and properties for each of these sounds.

Since our game will have a lot of audio and a lot of AI reactivity to audio, I wanted every sound to be able to notify AI when it is played. Each parameter has its own purpose, and each can affect how an AI reacts to the sound. For instance, the sound attenuation property determines how far the sound carries to nearby enemies: if an enemy is within the sound's attenuation range, the AI is notified and reacts accordingly.
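The attenuation rule above is essentially a range check around the sound's location. In Unreal this kind of notification is commonly routed through the AI Perception hearing sense (`UAISense_Hearing::ReportNoiseEvent`), though the post doesn't say whether this system uses it. The sketch below shows only the range test, with hypothetical `Enemy` and `EnemiesThatHear` names.

```cpp
#include <string>
#include <vector>

struct FVec3 { float X = 0.0f, Y = 0.0f, Z = 0.0f; };

struct Enemy { std::string Name; FVec3 Location; };

// Squared distance; comparing squared values avoids a sqrt per enemy.
float DistSquared(const FVec3& A, const FVec3& B) {
    const float DX = A.X - B.X, DY = A.Y - B.Y, DZ = A.Z - B.Z;
    return DX * DX + DY * DY + DZ * DZ;
}

// Returns the names of enemies inside the sound's attenuation radius,
// i.e. the ones that should be notified and react.
std::vector<std::string> EnemiesThatHear(const FVec3& SoundLocation, float AttenuationRadius,
                                         const std::vector<Enemy>& Enemies) {
    std::vector<std::string> Heard;
    const float RadiusSquared = AttenuationRadius * AttenuationRadius;
    for (const Enemy& E : Enemies)
        if (DistSquared(SoundLocation, E.Location) <= RadiusSquared)
            Heard.push_back(E.Name);
    return Heard;
}
```

With a 1000-unit radius, an enemy 500 units away is notified and one 3000 units away is not; a suppressed shot could use a smaller radius so fewer enemies react.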

I am very proud of this system and excited to get feedback on its design. I will post a link below to the documentation I wrote for it.